One branch to rule them all | guided series #1

A lot of us deploy our apps to multiple cloud environments (or soon will). When I had to do that myself, I faced many questions, with the main one being: where do I even start? Let me show you.

Hey buddy, glad you’re here! 👋🏼

My goal for this short series is very simple: teach you by example. Together, we’ll go through a full process I follow to solve various problems:

🔍 gather and understand requirements

🧠 brainstorm solutions

🎯 scope

👨🏻‍💻 implement & test (iterate until convergence)

🛑 stop (sounds easy? :p)

Today, I’ll describe the first two stages–we’ll gather and understand the requirements, and brainstorm various solutions. Next time, we’ll implement some of them.

What will we be working on in this series?

🤔 PROBLEM DEFINITION

How to deploy an app to multiple environments so that each env can run a different version of the application?

Roll up your sleeves, it’ll be fun!

🔍 Gather and understand requirements

Let’s decompose THE PROBLEM a bit:

App has to be deployed. It means it’s either a web app, or e.g. a backend service like an API. Roger that.

It should be possible to deploy the app to multiple different environments/targets (like staging, production, test, etc.)

Every environment can run a different version of the app–we need a way to:

configure the env to run a specific version of the app, which induces 👇🏼

version the application itself

🚨 Disclaimer:

Here, app may refer to any application you’re building or planning to build. It doesn’t really matter what it is in particular–what we’re building here will be flexible enough to handle multiple different use cases. However, there is one quite strong assumption I’m going to make:

There CAN’T be multiple versions of the application deployed to a single environment at the same time.

One more thing, not explicitly stated in the aforementioned problem definition: when it comes to git flow and branching strategies, the project must be easy to maintain. What I mean by that is that contributing to it shouldn’t make devs’ lives too complicated.

There must be a main branch to which everything gets merged. Feature branches should be used to work on new functionalities, but they aren’t allowed to live for too long (max several days). Last but not least, devs shouldn’t have to perform multiple merges between long-lived branches.

Okay. Now, let’s gather all of the above into a list of requirements:

Git flow must be a no-brainer–we don’t want to deal with multiple branches that will have to be frequently synchronized with one another.

Application needs to be versioned.

It must be possible to deploy a required version of the application to the selected deployment environment.

Every environment runs only one version of the application at the same time.

We could (and maybe even should?) stop here. But I want to challenge us even further by introducing one extra requirement:

Automate manual processes to a reasonable extent.

Don’t be surprised. Remember, you’re reading the too long; automated blog!

The list of requirements is the definition of done for the first stage of the process. It is also the input for stage no. 2, which is… brainstorming 🤯 Warm up your brain, buddy.

Got any questions so far? Feel free to leave a comment, I’ll get back to you promptly.

🧠 Brainstorm solutions

📋 req #1: No-brainer git flow

I believe there’s no one-size-fits-all approach, so let’s explore various git branching strategies that could be used in our project. I’ll walk you through three techniques, ordered from the least complex to the most complex one.

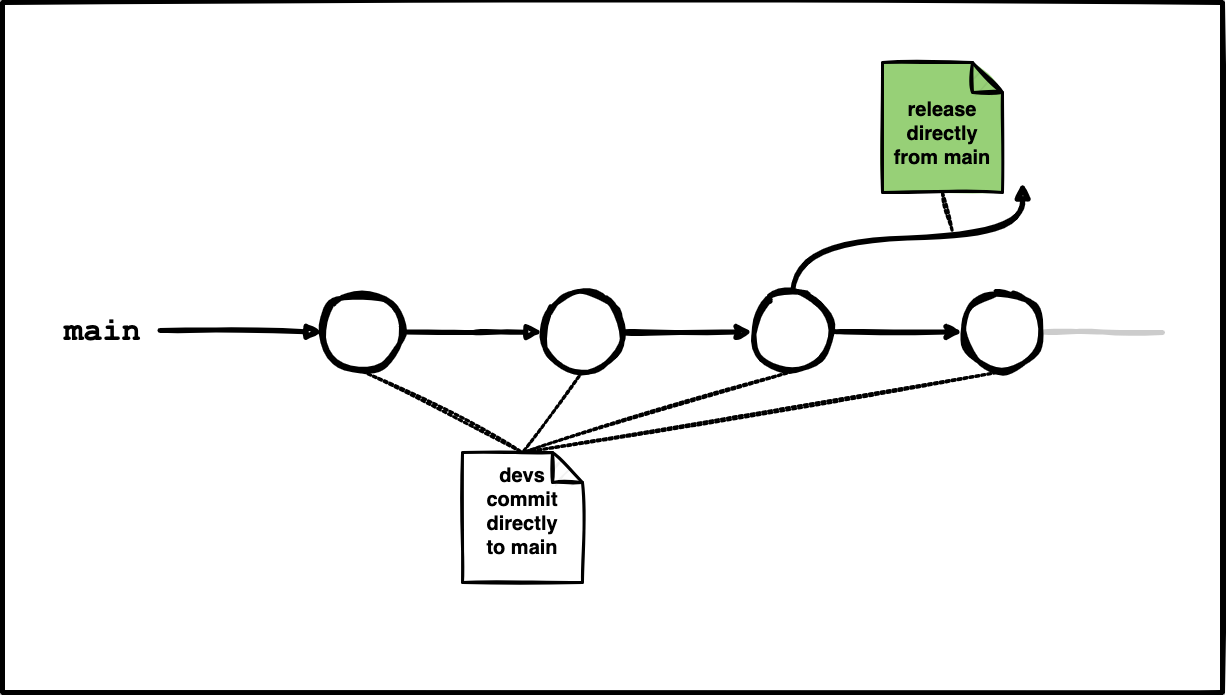

👉🏼 option #1: pure trunk-based development

Complexity: 🔵 ⚪ ⚪

Versatility: 🔵 ⚪ ⚪

Team size: solo 👤 or small team 👥

I’d say that in its pure variant (no feature branches at all), trunk-based development is a bit hardcore. It assumes there exists only a single primary branch called main (a.k.a the trunk) that devs are supposed to directly push to. No feature branches, no develop branches, no release branches, nothing. Just the main branch. Of course with some safeguards in place!

🛡️ Safeguard: Once the commit gets pushed, it automatically triggers the build process as part of the continuous integration workflow (at least that’s what should be in place so that contributors can sleep peacefully). If it fails, the commit gets instantly reverted to ensure the trunk never gets broken.
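To make the safeguard a bit more tangible, here is a minimal sketch of what such a CI step could do. It is not tied to any particular CI system: the function name `guard_trunk`, the build command, and the injectable `runner` are all assumptions made for illustration. The only real command it relies on is `git revert --no-edit`.

```python
import subprocess
from typing import Callable, Sequence

# A runner takes a command and returns its exit code.
Runner = Callable[[Sequence[str]], int]


def run(cmd: Sequence[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(cmd).returncode


def guard_trunk(commit: str, build_cmd: Sequence[str], runner: Runner = run) -> bool:
    """Build the trunk; if the build fails, revert `commit`.

    Returns True when the build passed, False when a revert was issued.
    """
    if runner(build_cmd) == 0:
        return True
    # --no-edit keeps the default revert message, so no human input is needed.
    runner(["git", "revert", "--no-edit", commit])
    return False
```

Note that `git revert` only creates a new commit locally. A real workflow would still have to push it back to the trunk, which this sketch leaves out.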

As you can probably guess, pure trunk-based development (no additional branches allowed) can work only in small teams of, say, three to four devs at most.

This approach may be tempting in relatively simple scenarios as it really is a no-brainer branching strategy. However, note that it doesn’t allow for a code review process, and to be honest, this is what makes it a no-go for me.

I’m a huge supporter of having the code go through reviews in PRs or MRs–these serve multiple purposes like improving code quality, sharing good practices across team members, spreading knowledge, and generally staying on top of what’s happening in the project.

Can we do better than having just the trunk that we push directly to, but without complicating the development flow too much?

I’m convinced we can.

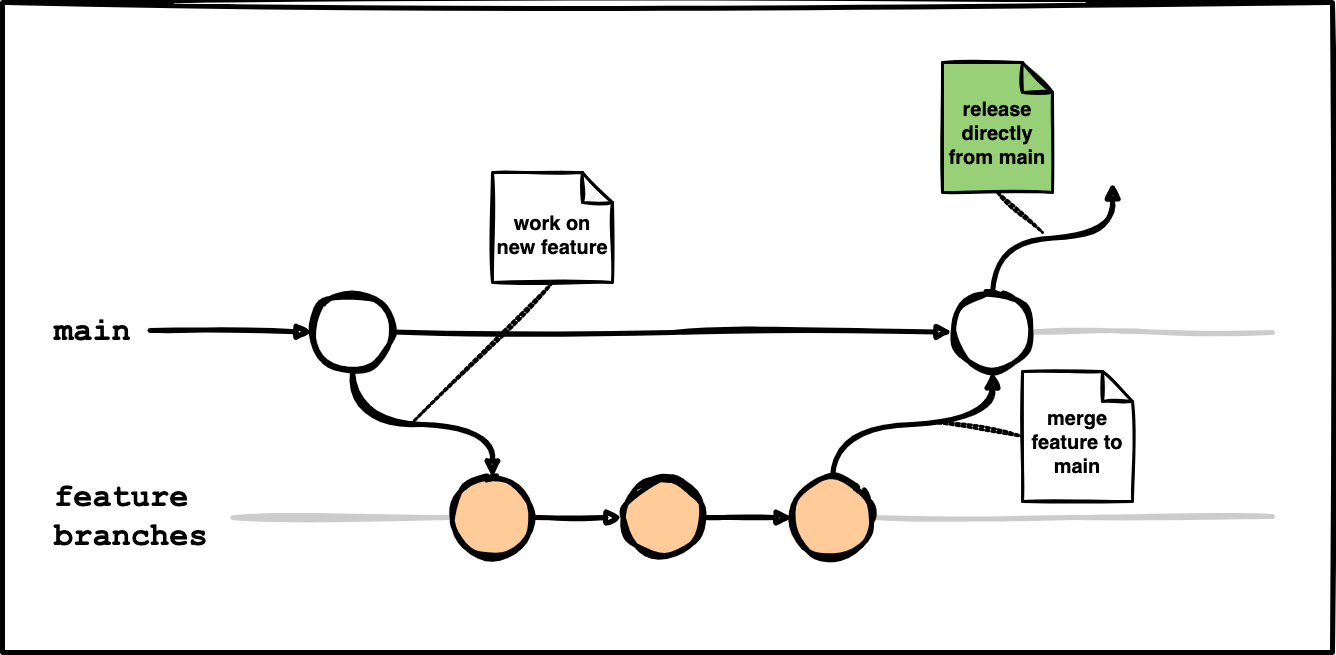

👉🏼 option #2: permissive trunk-based development

Complexity: 🔵 🔵 ⚪

Versatility: 🔵 🔵 ⚪

Team size: small 👥 or large 👥👥 teams

After reading multiple posts and discussions about the trunk-based development, I was often left with a feeling of a huge misunderstanding among the participants. Let’s look at one of the comments that nailed the context:

I’m curious what’s your take on this, dear reader.

If you would like to elaborate a bit, commenting is the best you can do atm.

Personally, I tend to agree with what emerges from the discussions online:

We should avoid having long-lived branches other than trunk.

Let’s think of a more permissive trunk-based development flow. Simple, functional, but not simplistic: project with the main branch (trunk) and short-lived feature branches (max several days). It’s a no-brainer git flow (more like GitHub flow than the original git-flow) that will keep your branching logic as simple as possible, while allowing for more advanced use cases, plus smooth collaboration in larger teams.

In this strategy, it is okay to create short-lived feature branches to work on new features. Once the feature is ready, a pull request to the main branch should be opened. After all discussion threads are successfully resolved and optional automated workflows like linters, type checks, or unit tests pass, it is fine for the code to be merged. Merge can trigger additional steps like docker image building, tagging, etc.
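The merge rule described above can be written down as a tiny predicate. This is only a sketch: `PullRequest` is a hypothetical, simplified data structure (not the API of GitHub or any other platform), and the check names are placeholders.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PullRequest:
    """Hypothetical, simplified view of a pull request."""

    target_branch: str
    unresolved_threads: int = 0
    # Check name -> did it pass? (e.g. "lint", "type-check", "unit-tests")
    checks: Dict[str, bool] = field(default_factory=dict)


def can_merge(pr: PullRequest, trunk: str = "main") -> bool:
    """Mergeable when the PR targets the trunk, has no open threads,
    and every configured check passed."""
    return (
        pr.target_branch == trunk
        and pr.unresolved_threads == 0
        and all(pr.checks.values())
    )
```

Since the automated workflows are optional, a PR with no checks configured is treated as passing here. A stricter team could require a minimum set of checks instead.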

The main difference here is that it is possible to work on multiple features that are developed by larger teams, while still avoiding merge hell and the need to synchronize multiple long-lived branches.

Definitely something worth having a closer look at.

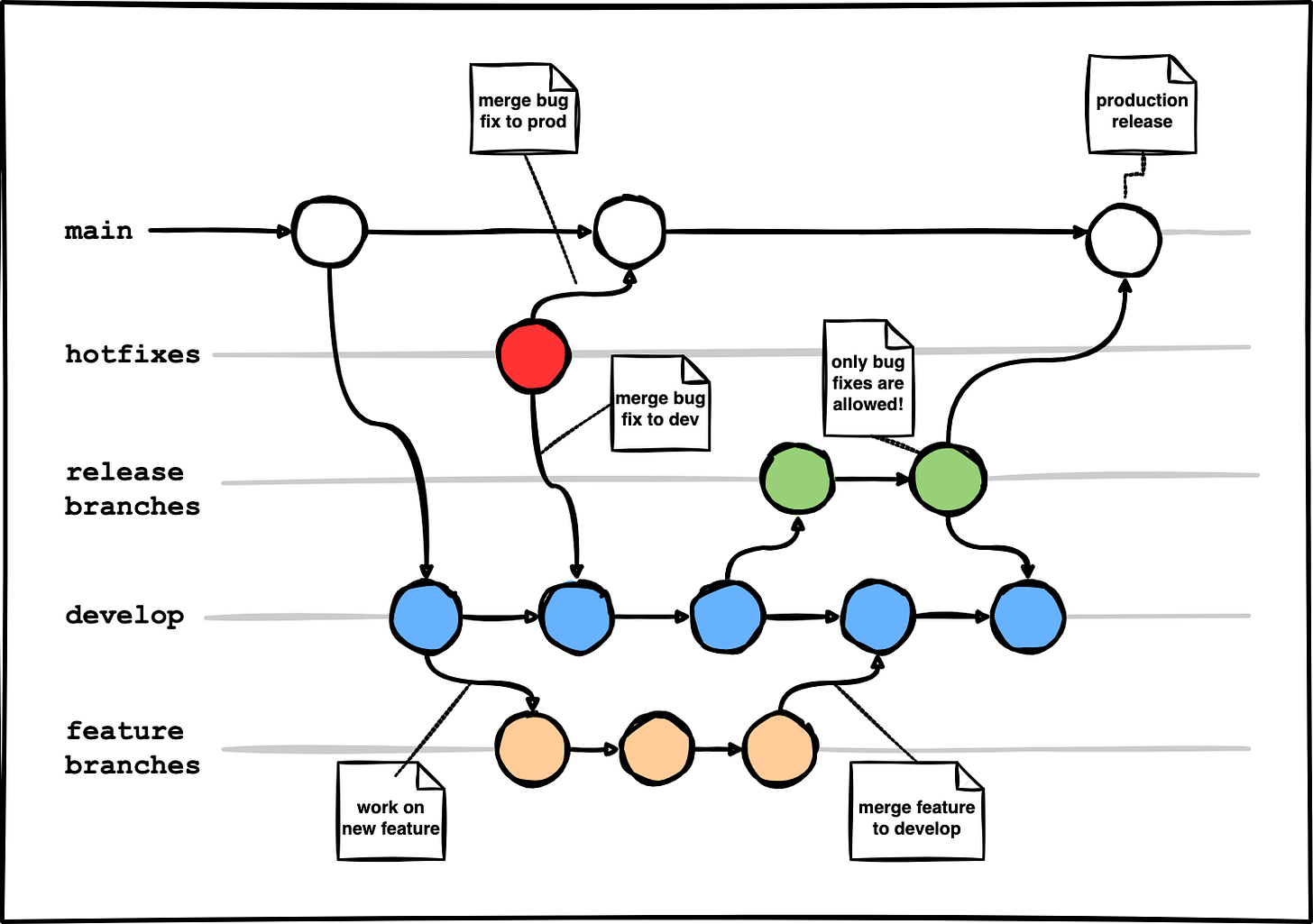

👉🏼 option #3: git-flow

Complexity: 🔵 🔵 🔵

Versatility: 🔵 🔵 🔵

Team size: large teams 👥👥

Original git-flow, introduced in 2010 by Vincent Driessen in this article, uses multiple branches in its branching strategy: main, develop, and supporting branches (feature, release, and hotfix).

🚫 Complexity: the flow is quite complex as it requires frequent branch synchronization. This happens whenever hotfixes are introduced–these have to be merged back to both develop and main branches.

If merging comes with conflicts, this may turn into a nightmare.

✅ Versatility: git-flow may come in handy if you work in larger teams that have strict release requirements. Additionally, release branches help maintain multiple versions of the application (including multiple versions deployed to a single environment like production). But everything comes at a price, and here the price is complexity.

10 years after the first post introducing git-flow, Vincent Driessen added a commentary with his reflection on the topic of a git-based development:

[web apps] is not the class of software that I had in mind when I wrote the blog post 10 years ago. If your team is doing continuous delivery of software, I would suggest to adopt a much simpler workflow (like GitHub flow) instead of trying to shoehorn git-flow into your team.

If, however, you are building software that is explicitly versioned, or if you need to support multiple versions of your software in the wild, then git-flow may still be as good of a fit to your team as it has been to people in the last 10 years.

📋 req #2: Application needs to be versioned

There are at least two versioning approaches that come to mind instantly: tagging a specific version of the code using git tags, and containerizing the application, leveraging container tags to distinguish what version of the app is inside the image. There are also other solutions like creating versioned packages in languages like JS and Python, but I’d like the tutorial to be as language-agnostic as possible, so I’m going to exclude packages from further analysis.

👉🏼 option #1: git tagging

Git tagging is simple and reliable. It has several advantages:

✅ It’s easy to check out any version of the code when needed.

✅ It’s based on git, which is already being used by almost everyone in the IT industry. It means using git tags won’t add any new dependency to our project. Nice.

Git tagging has one drawback, though:

🚫 The tag is assigned to all of the code in the repository, not just to a single directory with the application.

This may not sound like an issue, but imagine your project is a monorepo with the source code of multiple applications, each stored in a separate subdirectory that should be versioned separately. How do you know which app got tagged in that specific git tag you checked out?

Sure, you could add a prefix to the version tag and end up with something like app1-0.1.0, app2-1.2.4, etc. The tags would still describe the entire code in the monorepo, but you would know which app directory to look at based on the tag name. Personally, I find this approach useful to a certain degree.
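If you went with prefixed tags, you would need a way to split a tag back into the app name and its version. Here is a small sketch of that idea. It assumes tags follow the `<app>-<major>.<minor>.<patch>` shape used in the examples above; the names `parse_tag` and `latest_version` are made up for illustration.

```python
import re
from typing import Iterable, NamedTuple, Optional, Tuple

# <app>-<major>.<minor>.<patch>, e.g. "app1-0.1.0" (assumed naming scheme).
TAG_PATTERN = re.compile(
    r"(?P<app>[a-z0-9]+(?:-[a-z0-9]+)*)-(?P<version>\d+\.\d+\.\d+)"
)


class AppTag(NamedTuple):
    app: str
    version: Tuple[int, int, int]


def parse_tag(tag: str) -> AppTag:
    """Split a prefixed tag into the app name and a comparable version tuple."""
    match = TAG_PATTERN.fullmatch(tag)
    if match is None:
        raise ValueError(f"not an app version tag: {tag!r}")
    major, minor, patch = (int(part) for part in match["version"].split("."))
    return AppTag(match["app"], (major, minor, patch))


def latest_version(tags: Iterable[str], app: str) -> Optional[Tuple[int, int, int]]:
    """Return the highest version tagged for `app`, or None if it has no tags."""
    versions = [t.version for t in map(parse_tag, tags) if t.app == app]
    return max(versions, default=None)
```

Comparing versions as integer tuples (rather than strings) matters: as strings, "0.10.0" would sort before "0.9.0".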

The aforementioned drawback doesn’t sound harmful at first, but there’s one more perspective I believe I should mention here: code isolation.

We’ll soon discuss deployment configuration strategies, but at this point it’s important to remember that the deployment platform, like a VM [1] or Kubernetes [2], will have to run the selected version of the application. Relying solely on the git tagging mechanism requires checking out a specific tag, meaning the whole repository will be checked out.

🙅🏻‍♂️🙅🏻‍♂️ I wouldn’t do it, either in a monorepo or in the one-repo-per-app case.

Why?

Because I don’t want app2 deployment to include any code that is not strictly required by this app to run. I don’t want app1’s source code to be there. Not to mention the utilities like readmes, changelogs, etc.

Plus, it is simply dangerous–it potentially exposes the entire codebase of the project if any deployment gets hacked.

Okay, I hope you now understand that we need an approach that will allow us to deploy only the code used by the application when deploying its specific version.

Containers come in really handy in such situations.

👉🏼 option #2: containers with tagging

There are so many benefits of containerizing the app that it’s hard to list them all:

✅ isolating code into a self-contained entity

✅ tagging images enables versioning of their content

✅ encouraging good dev practices

✅ reducing the risk of the famous “works for me” situation [3]

✅ anything that supports containers can be used as the deployment platform

Introducing the concept of containerization to your project will increase its complexity a bit, but it’s worth every additional second invested.

Why?

Well, I think it’s mostly because it requires the developer to write down the environment configuration needed to run the app, in the form of e.g. a Dockerfile. The Dockerfile alone serves as an invaluable piece of documentation, and since I strongly believe in self-documenting code, I’d recommend doing this.
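As for the "tagging images enables versioning" benefit from the list above: an image reference typically looks like `<registry>/<app>:<tag>`, with the app version used as the tag. Below is a minimal sketch of building such a reference. The registry address and the `image_ref` helper are hypothetical; the tag validation follows Docker's documented rules for tag names (letters, digits, underscores, periods, and dashes, not starting with a period or dash, at most 128 characters).

```python
import re

# Docker tag rules: [A-Za-z0-9_.-], no leading "." or "-", max 128 characters.
TAG_RE = re.compile(r"[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}")


def image_ref(registry: str, app: str, version: str) -> str:
    """Build an image reference that pins one specific version of the app."""
    if TAG_RE.fullmatch(version) is None:
        raise ValueError(f"invalid image tag: {version!r}")
    return f"{registry}/{app}:{version}"
```

For example, `image_ref("registry.example.com/team", "app2", "1.2.4")` gives `registry.example.com/team/app2:1.2.4`, and that single string is all a deployment platform needs to know which version to run.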

📋 req #3-4: Configurable deployment targets

There’s one thing we need to keep in mind here: our configuration method must make it possible to specify one app version per deployment environment. Nothing less, nothing more.

👉🏼 option #1: config files

One of the most popular ways to configure stuff is to use configuration files. Shocker.

Jokes aside, configuration files are a remarkably simple, yet powerful solution to this problem. I can easily imagine a directory in your project called config that would have a subdirectory named after every deployment target you have. In each of these subdirectories, you could put a simple .env/YAML/whatever-format-you-choose file (one per env) that would have every required variable defined in it. If these files were to store secrets, they could simply be encrypted with something like sops and decrypted on the fly during the CI/CD process.
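To give you a feeling of how little code is needed to consume such files, here is a rough sketch of a loader for the `config/<env>/.env` layout. It is deliberately naive: it only understands `KEY=VALUE` lines, comments, and optional double quotes, and it ignores encryption entirely. The `APP_VERSION` variable used in the usage note is a made-up name, not something defined earlier in this post.

```python
from pathlib import Path
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines; blank lines and # comments are skipped."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"expected KEY=VALUE, got: {raw!r}")
        # Strip surrounding double quotes, if any (no escape handling).
        values[key.strip()] = value.strip().strip('"')
    return values


def load_config(env: str, root: Path = Path("config")) -> Dict[str, str]:
    """Load the variables defined for one deployment target."""
    return parse_env((root / env / ".env").read_text())
```

With a `config/staging/.env` containing `APP_VERSION=1.2.4`, calling `load_config("staging")["APP_VERSION"]` would tell the pipeline which version staging is supposed to run. One file per env means one version per env, which is exactly the constraint we need.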

Here’s what the configuration directory tree could look like:

config/
├── production/
│   └── .env
├── staging/
│   └── .env
└── test/
    └── .env

👉🏼 option #2: helm charts

Helm is a package manager for Kubernetes. It lets you create, version, and publish your Kubernetes applications in a flexible, standardized format.

It’s pretty dope, but choosing helm requires us to assume that the deployment platform for our application will be solely Kubernetes, which is not necessarily true.

This is why I’m going to exclude it from our brainstorming session.

👉🏼 option #3: branch-based deployment

In branch-based deployment, deployment targets are associated with specific branches–whenever changes get pushed, a single environment is updated by its dedicated CI/CD workflow. Simple.
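At its core, branch-based deployment is just a lookup from a branch name to a deployment target. Here is a sketch of that lookup. The branch and environment names are placeholders I picked for illustration; your CI/CD system would express the same mapping in its own configuration format.

```python
from typing import Dict, Optional

# Hypothetical branch -> environment mapping; adjust the names to your project.
BRANCH_TARGETS: Dict[str, str] = {
    "main": "production",
    "staging": "staging",
    "test": "test",
}

REF_PREFIX = "refs/heads/"


def target_for_branch(ref: str) -> Optional[str]:
    """Return the environment a push to `ref` should update.

    Accepts a bare branch name or a full ref like "refs/heads/main".
    Returns None for branches that don't trigger any deployment.
    """
    branch = ref[len(REF_PREFIX):] if ref.startswith(REF_PREFIX) else ref
    return BRANCH_TARGETS.get(branch)
```

Every branch maps to at most one environment, so a push can only ever update a single target. Feature branches are simply absent from the mapping and deploy nowhere.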

There still needs to be a mechanism that would allow us to use different variable values per deployment target, though. There are several ways to achieve this, one of them being GitHub Environments or something similar. It lets you define a set of variables/secrets that are assigned to a specific deployment environment. The CI/CD workflow then uses environment variables that are populated with the secrets’ values from the matching deployment target.

Env-specific secrets could also be stored in cloud secrets managers like AWS Secrets Manager or Google Cloud’s Secret Manager. They work in virtually the same way, but require additional configuration and permissions to connect to the cloud environment and retrieve the required values from there.

There’s one more way to achieve env-specific configuration: having separate CI/CD workflows defined for every deployment target. The drawback here is that this approach may require quite a lot of copy-pasting and extra maintenance effort when the same part of the workflow needs to be updated across multiple targets. The advantage, on the other hand, is that it doesn’t necessarily require us to have a per-env branch in the repository, which is nice.

Wow, it must have taken a while to go through all of the content above, buddy.

Congratulations! 🙌🏼

In the next post, I’ll guide you through the scoping stage to select one option for every requirement from our list. Then, we’ll dive into the implementation and testing. And then, we’ll stop.

If you have any thoughts/suggestions you’d like to share, leave a comment below.

Thanks, buddy.

Stay tuned! 🙌🏼

[1] e.g. Compute Engine in GCP or EC2 in AWS

[2] e.g. GKE in GCP or EKS in AWS

[3] Although the introduction of ARM-based docker images reintroduced this fear: some external dependencies like Python libraries may not be ARM-compatible yet.